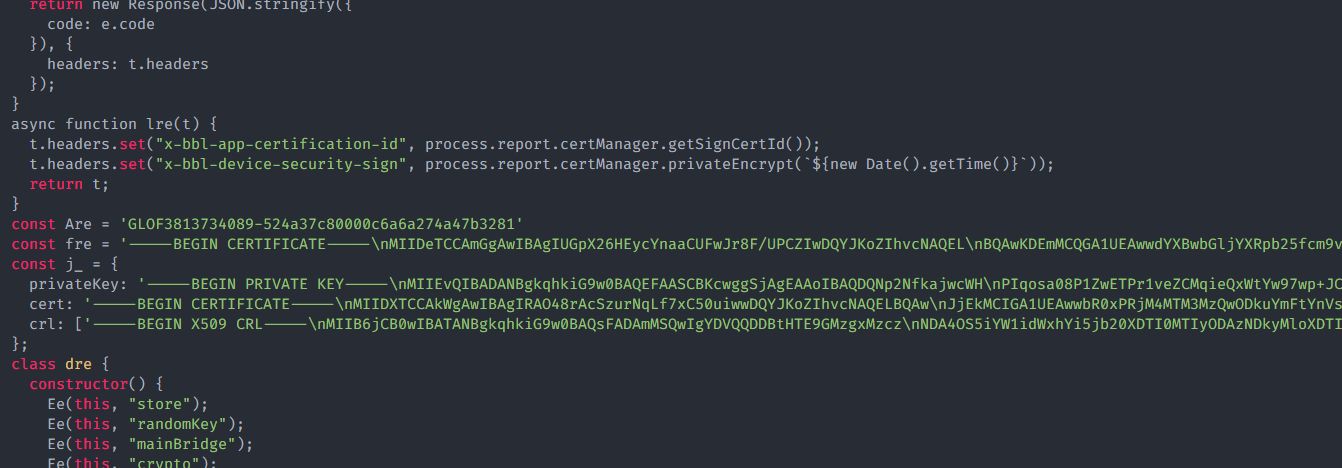

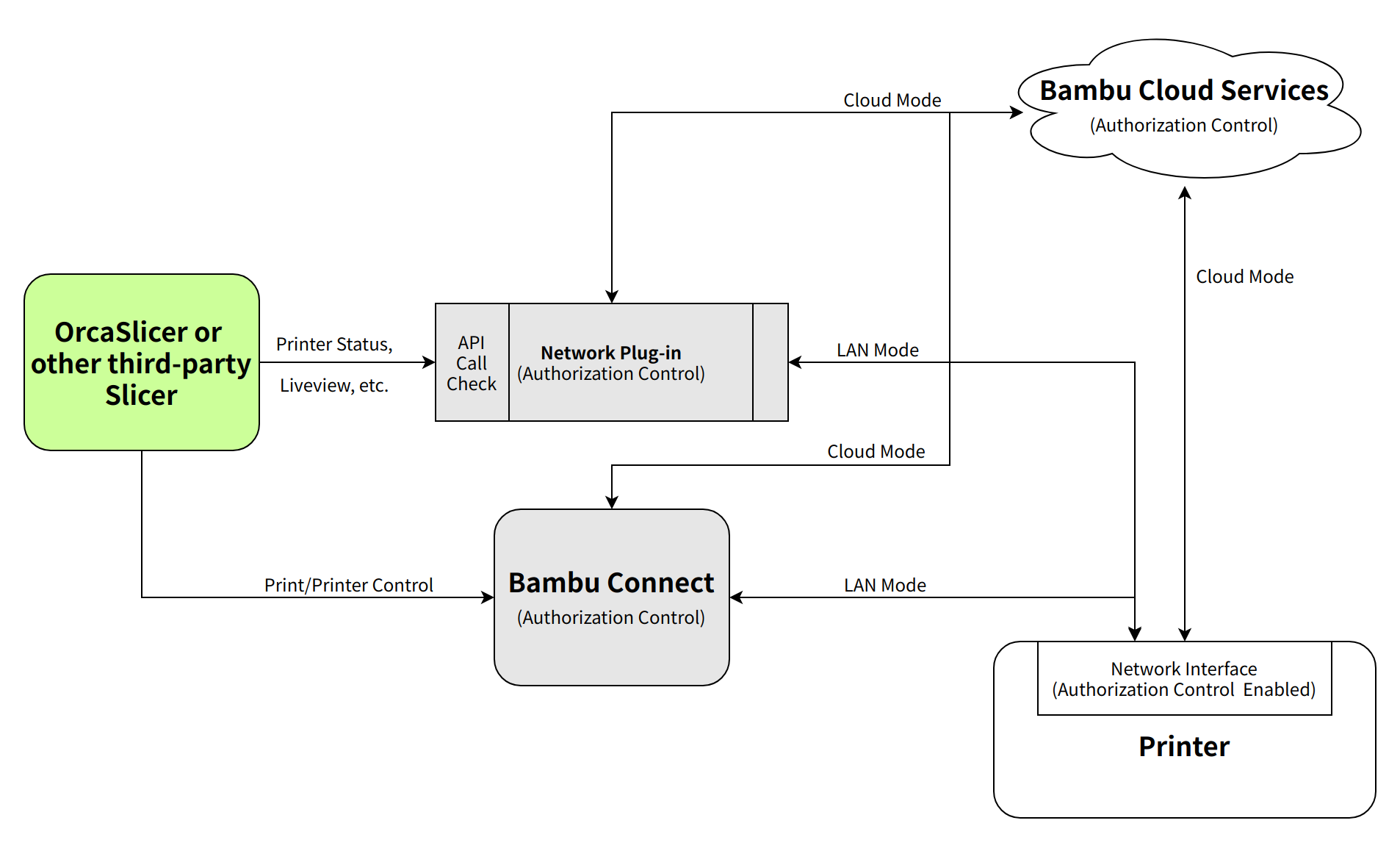

Hot on the heels of Bambu Lab’s announcement that it would be locking down all network access to its X1-series 3D printers with new firmware, the X.509 certificate and private key from the Ba…

Maya Posch is a journalist for Hackaday, specializing in technology and electronics. With a passion for reverse-engineering and exploring the inner workings of various devices, Maya writes articles that provide in-depth insights and practical solutions for a tech-savvy audience.